High-Volume Data Flow & Integration Assessment: 61862636363, 965315720, 3032561031, 289540745, 120106997, 451404290

Organizations that handle vast datasets need to assess how their high-volume data flows are integrated. Unique identifiers, such as 61862636363 and 965315720, are the links between disparate data sources, and evaluating them systematically exposes data quality problems and integration challenges. This assessment improves operational performance and informs strategic decision-making, which makes effective management techniques worth examining in detail.

Understanding High-Volume Data Flow

High-volume data flow is the continuous, rapid movement of large datasets across systems and platforms. Handling it requires robust mechanisms for managing and integrating that data.

In this environment, real-time analytics are needed to derive insights while the data is still current, so that decisions rest on up-to-date information.
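As a minimal sketch of real-time analytics over such a flow, the Python class below keeps a per-identifier event count over a sliding time window. The class name `SlidingWindowCounter`, the 60-second window, and the assumption that events arrive in timestamp order are illustrative choices, not features of any particular platform.

```python
from collections import deque


class SlidingWindowCounter:
    """Count events per identifier over the most recent `window_seconds` seconds.

    Hypothetical helper for illustration. Assumes events arrive in
    non-decreasing timestamp order (timestamps are floats, in seconds).
    """

    def __init__(self, window_seconds: float) -> None:
        self.window_seconds = window_seconds
        self._events = deque()  # (timestamp, identifier) pairs, oldest first
        self._counts = {}  # identifier -> number of events still in the window

    def add(self, timestamp: float, identifier: str) -> None:
        self._events.append((timestamp, identifier))
        self._counts[identifier] = self._counts.get(identifier, 0) + 1
        self._evict(timestamp)

    def _evict(self, now: float) -> None:
        # Drop events at or before `now - window_seconds`, so the window
        # covers the half-open interval (now - window_seconds, now].
        while self._events and self._events[0][0] <= now - self.window_seconds:
            _, old_id = self._events.popleft()
            self._counts[old_id] -= 1
            if self._counts[old_id] == 0:
                del self._counts[old_id]

    def counts(self) -> dict:
        return dict(self._counts)


counter = SlidingWindowCounter(window_seconds=60)
counter.add(0.0, "61862636363")
counter.add(30.0, "965315720")
counter.add(75.0, "61862636363")  # the event at t=0 falls out of the window
print(counter.counts())  # {'61862636363': 1, '965315720': 1}
```

Each event is appended once and evicted once, so the amortized cost per event is constant. A production stream processor would also have to deal with late or out-of-order events, which this sketch ignores.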

Scalability is equally important: the infrastructure must absorb fluctuating data volumes without losing performance or reliability.
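One common way to scale such a flow horizontally is to partition records by identifier, so that each worker handles a stable subset. The sketch below assumes records are dictionaries with an `"id"` field (a hypothetical layout) and derives the partition from a SHA-256 digest, because Python's built-in `hash()` for strings is randomized per process and would send the same identifier to different partitions across restarts.

```python
import hashlib


def partition_for(identifier: str, num_partitions: int) -> int:
    """Map an identifier to a stable partition index in [0, num_partitions)."""
    if num_partitions <= 0:
        raise ValueError("num_partitions must be positive")
    digest = hashlib.sha256(identifier.encode("utf-8")).digest()
    # Interpret the first 8 bytes of the digest as an unsigned integer.
    return int.from_bytes(digest[:8], "big") % num_partitions


def partition_records(records: list, num_partitions: int) -> list:
    """Group records into `num_partitions` lists keyed by their "id" field."""
    partitions = [[] for _ in range(num_partitions)]
    for record in records:
        partitions[partition_for(record["id"], num_partitions)].append(record)
    return partitions


ids = ["61862636363", "965315720", "3032561031", "289540745", "120106997", "451404290"]
for index, bucket in enumerate(partition_records([{"id": i} for i in ids], 3)):
    print(index, [record["id"] for record in bucket])
```

With modulo placement, changing `num_partitions` reassigns most identifiers to new partitions; consistent hashing is a common alternative when the number of workers changes often.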

Importance of Data Integration Assessment

As organizations increasingly rely on vast datasets for strategic insight, a thorough data integration assessment becomes essential.

The assessment identifies data quality issues, such as missing fields or malformed identifiers, along with integration challenges that could hinder operational efficiency.
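As a minimal sketch of such an assessment, the function below profiles a batch of records for two common quality issues: missing required fields and malformed identifiers. The field names in `REQUIRED_FIELDS` and the rule that an identifier is a string of ASCII digits are hypothetical conventions chosen for illustration.

```python
import re
from collections import Counter

# Hypothetical schema: every record should carry these fields.
REQUIRED_FIELDS = ("id", "source", "value")
# Assumed identifier convention: one or more ASCII digits, stored as a string.
ID_PATTERN = re.compile(r"[0-9]+")


def profile_quality(records: list) -> dict:
    """Count missing required fields and malformed identifiers in a batch."""
    issues = Counter()
    for record in records:
        for field in REQUIRED_FIELDS:
            if record.get(field) in (None, ""):
                issues[f"missing:{field}"] += 1
        identifier = record.get("id")
        # Non-string identifiers (e.g. ints) count as malformed under this convention.
        if identifier not in (None, "") and not (
            isinstance(identifier, str) and ID_PATTERN.fullmatch(identifier)
        ):
            issues["malformed:id"] += 1
    return dict(issues)


batch = [
    {"id": "61862636363", "source": "crm", "value": 42},
    {"id": "96531-5720", "source": "billing", "value": ""},
    {"source": "crm", "value": 7},
]
print(profile_quality(batch))  # {'missing:value': 1, 'malformed:id': 1, 'missing:id': 1}
```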

Case Studies: Analyzing Unique Identifiers

Unique identifiers are pivotal to data integration because they allow records held in disparate systems to be linked accurately.

Identifier analysis lets organizations check whether each identifier is in fact unique, which protects the integrity and coherence of every dataset.
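A basic identifier analysis checks whether identifiers really are unique. The sketch below counts occurrences, reports duplicates, and computes a simple uniqueness ratio; the `"id"` field name and both helper names are hypothetical.

```python
from collections import Counter


def find_duplicate_ids(records: list, key: str = "id") -> dict:
    """Return each identifier that occurs more than once, with its count."""
    counts = Counter(r[key] for r in records if r.get(key) is not None)
    return {identifier: n for identifier, n in counts.items() if n > 1}


def uniqueness_ratio(records: list, key: str = "id") -> float:
    """Distinct identifiers divided by records that have one (1.0 means no duplicates)."""
    ids = [r[key] for r in records if r.get(key) is not None]
    return len(set(ids)) / len(ids) if ids else 1.0


sample = [{"id": "120106997"}, {"id": "451404290"}, {"id": "120106997"}]
print(find_duplicate_ids(sample))  # {'120106997': 2}
print(round(uniqueness_ratio(sample), 3))  # 0.667
```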

Consistent management of unique identifiers improves interoperability between datasets, which in turn supports better decision-making and more streamlined processes in high-volume environments.
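To make the linking step concrete, the sketch below joins two record lists on a shared identifier and reports the identifiers that failed to match on either side, which is where interoperability problems usually surface. The source names (`crm`, `billing`) and their fields are hypothetical, and the function assumes identifiers are already unique within the right-hand list, which should be verified first.

```python
def link_by_id(left: list, right: list, key: str = "id"):
    """Join two record lists on `key`.

    Returns (linked, unmatched_left, unmatched_right). Assumes `key` values
    are unique within `right`; duplicates there would be silently collapsed.
    """
    right_index = {record[key]: record for record in right}
    linked, unmatched_left = [], []
    for record in left:
        match = right_index.get(record[key])
        if match is None:
            unmatched_left.append(record[key])
        else:
            # On a field-name clash, the right-hand value wins.
            linked.append({**record, **match})
    left_ids = {record[key] for record in left}
    unmatched_right = [k for k in right_index if k not in left_ids]
    return linked, unmatched_left, unmatched_right


crm = [{"id": "61862636363", "name": "A"}, {"id": "965315720", "name": "B"}]
billing = [{"id": "965315720", "balance": 10}, {"id": "289540745", "balance": 5}]
linked, only_crm, only_billing = link_by_id(crm, billing)
print(linked)        # [{'id': '965315720', 'name': 'B', 'balance': 10}]
print(only_crm)      # ['61862636363']
print(only_billing)  # ['289540745']
```

For tabular data, pandas `DataFrame.merge` with `how="outer"` and `indicator=True` yields the same matched/unmatched breakdown in its `_merge` column.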

Best Practices for Managing Data Integration

Effective data integration requires a systematic approach that keeps definitions and formats clear and consistent across all datasets.
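Consistency often starts with the identifiers themselves: the same value may arrive as an integer from one system and as a padded or zero-prefixed string from another. The hypothetical `canonical_id` function below maps such variants to one string form. It assumes identifiers are non-negative integers whose leading zeros and surrounding whitespace carry no meaning; where leading zeros are significant, that step must be dropped.

```python
import re
from typing import Union

# Assumed convention: identifiers consist solely of ASCII digits.
_DIGITS = re.compile(r"[0-9]+")


def canonical_id(raw: Union[int, str]) -> str:
    """Normalize an identifier to a single canonical string form."""
    text = str(raw).strip()
    if not _DIGITS.fullmatch(text):
        raise ValueError(f"not a numeric identifier: {raw!r}")
    # Assumption: leading zeros are not significant ("0965315720" == "965315720").
    return text.lstrip("0") or "0"


variants = [965315720, " 965315720 ", "0965315720"]
print({canonical_id(v) for v in variants})  # {'965315720'}
```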

A robust data governance framework helps enforce compliance and quality standards.

Well-chosen integration tools provide reliable connectivity between systems and keep data flowing, so organizations can put their data to effective use.

Continuous monitoring and optimization of these processes further improve integration efficiency and create conditions for innovation and strategic decision-making.
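Continuous monitoring can start as simply as tracking a rolling error rate. The sketch below keeps the record and failure counts of the last few batches and flags when the failure share exceeds a threshold. The class name, the window of three batches, and the 5% threshold are illustrative assumptions, not recommended values.

```python
from collections import deque


class ErrorRateMonitor:
    """Flag when the failure rate over the last `window` batches exceeds `threshold`.

    Hypothetical helper; `threshold` is a fraction between 0 and 1.
    """

    def __init__(self, window: int, threshold: float) -> None:
        if window <= 0:
            raise ValueError("window must be positive")
        if not 0.0 <= threshold <= 1.0:
            raise ValueError("threshold must be between 0 and 1")
        self.threshold = threshold
        # Each entry is (records_processed, records_failed); old batches drop off automatically.
        self._batches = deque(maxlen=window)

    def record_batch(self, records: int, failures: int) -> bool:
        """Record one batch and return True if the rolling error rate is above the threshold."""
        self._batches.append((records, failures))
        total = sum(n for n, _ in self._batches)
        failed = sum(f for _, f in self._batches)
        return total > 0 and failed / total > self.threshold


monitor = ErrorRateMonitor(window=3, threshold=0.05)
# Prints False, False, True, True (one per line).
for records, failures in [(1000, 10), (1000, 20), (1000, 200), (1000, 0)]:
    print(monitor.record_batch(records, failures))
```

Because the window is bounded, a single bad batch keeps the alert raised only until it ages out, which is the behavior the fourth batch above illustrates.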

Conclusion

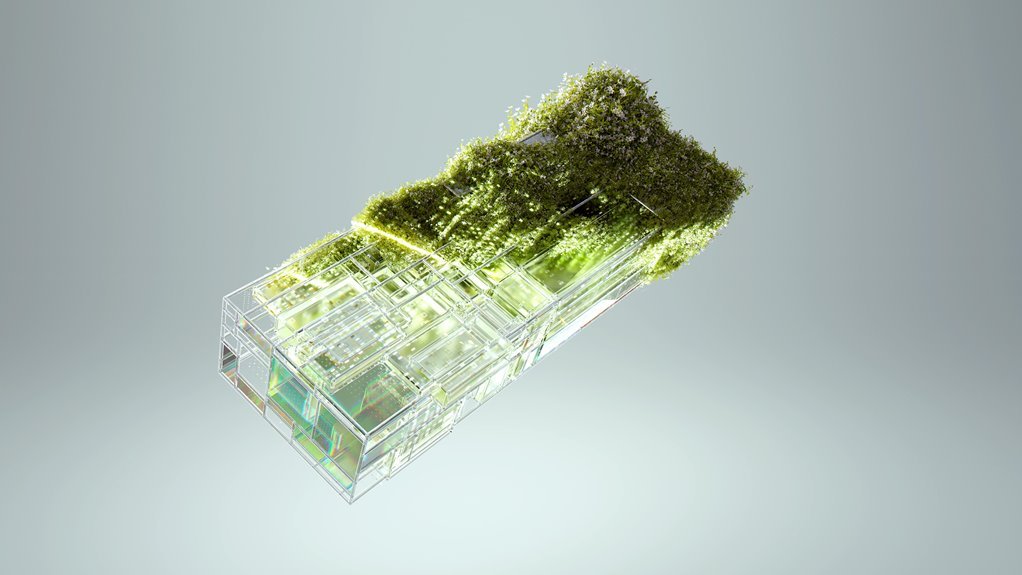

Assessing high-volume data flows and managing unique identifiers strategically work like the scaffolding of a building: they support the structure of data integrity and coherence. Rigorous analysis of these identifiers lets organizations uncover data quality issues and streamline integration. This careful approach improves operational performance and supports informed decision-making, fostering a robust data-driven environment that can adapt to changing data challenges.